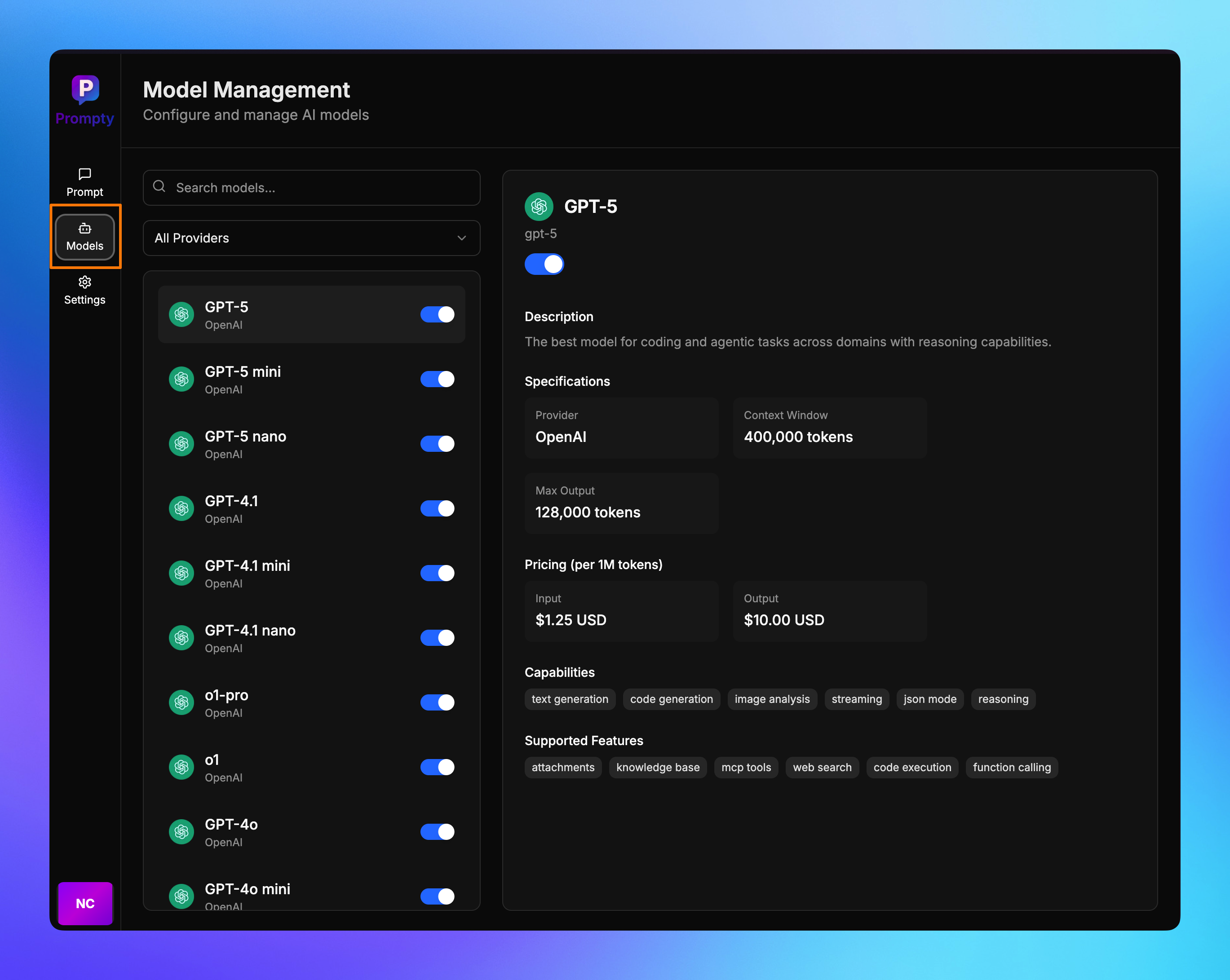

Manage chat models

Learn how to manage which AI models are available in your prompt editor.

Supported Model Types

OpenAI Models

- GPT-5: OpenAI's best model for coding and agentic tasks across domains, with reasoning capabilities

- GPT-5 mini: A faster, cost-efficient version of GPT-5 for well-defined tasks with reasoning capabilities

- GPT-5 nano: Fastest, most cost-efficient version of GPT-5 for summarization and classification tasks

- GPT-4.1: Fast, highly intelligent model with a 1M-token context window; OpenAI's flagship GPT model for complex tasks

- GPT-4.1 mini: Balances intelligence, speed, and cost, making it an attractive choice for many use cases

- GPT-4.1 nano: The fastest, most cost-effective GPT-4.1 model, with a 1M-token context window and multimodal capabilities

- o1-pro: A version of o1 that uses more compute to produce better responses on complex reasoning tasks

- o1: The previous full o-series reasoning model, trained with reinforcement learning to think before answering

- GPT-4o: Fast, intelligent, flexible GPT model with text and image inputs and text outputs

- GPT-4o mini: Fast, affordable small model for focused tasks, with a 128K context window

- GPT-4 Turbo: The next generation of GPT-4, designed to be cheaper and more capable

- GPT-4: An older high-intelligence GPT model

- GPT-3.5 Turbo: Legacy GPT model for cheaper chat and non-chat tasks (deprecated)

Anthropic Models

- Claude Opus 4.1: Anthropic’s most capable and intelligent model yet, with superior reasoning capabilities

- Claude Opus 4: Anthropic’s previous flagship model for complex reasoning and analysis

- Claude Sonnet 4: Balanced performance and cost for a wide range of tasks

- Claude Sonnet 3.7: Stable Sonnet version, efficient for most use cases

- Claude Sonnet 3.5: Cost-effective choice for general tasks

- Claude Haiku 3.5: Fast and economical, ideal for lightweight tasks

Google Models

- Gemini 2.5 Pro: Google’s most capable model with reasoning, coding, and multimodal capabilities

- Gemini 2.5 Flash: Efficient and cost-effective model with a 1M-token context window and multimodal capabilities

- Gemini 2.5 Flash-Lite: A lighter version of Gemini 2.5 Flash optimized for speed and cost-effectiveness

- Gemini 2.0 Flash: Balanced performance model with multimodal capabilities and a 1M-token context window

- Gemini 2.0 Flash-Lite: A lighter version of Gemini 2.0 Flash optimized for speed and cost-effectiveness

xAI Models

- Grok 4: Next-generation Grok model with strong reasoning and coding capabilities and enterprise data handling

- Grok Code Fast 1: Speedy, economical reasoning model specialized for agentic coding, with a 256K context window

- Grok 3: Excels at enterprise tasks like data extraction, coding, and summarization with strong domain knowledge

- Grok 3 Fast: A faster Grok 3 variant for coding, data extraction, and summarization, with deep domain knowledge

- Grok 3 Mini: A lightweight model that thinks before responding; fast and well suited to logic-based tasks

Organizing Models

Prompty provides flexible model management capabilities that allow you to customize which models are available in your workflow.

Enable/Disable Models

You can control which models appear in the prompt editor by enabling or disabling specific models:

- Enabled Models: These models will appear in the model selection dropdown in the prompt editor

- Disabled Models: These models will be hidden from the prompt editor interface but remain available for configuration
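Conceptually, the enabled/disabled setting acts as a filter over the full model list: the dropdown shows only enabled entries, while disabled entries stay in the list and keep their configuration. The Python sketch below only illustrates that behavior; `ModelEntry`, `dropdown_models`, and `toggle` are hypothetical names and are not part of Prompty.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ModelEntry:
    """Hypothetical record for one model in the model management section."""
    name: str
    provider: str
    enabled: bool = True


def dropdown_models(models: List[ModelEntry]) -> List[str]:
    """Hypothetical helper: names shown in the prompt editor dropdown (enabled models only)."""
    return [m.name for m in models if m.enabled]


def toggle(models: List[ModelEntry], name: str) -> None:
    """Hypothetical helper: flip a model's enabled flag.

    The entry is never removed from the list, so a disabled model
    remains available for configuration.
    """
    for m in models:
        if m.name == name:
            m.enabled = not m.enabled
            return
    raise KeyError(name)


models = [
    ModelEntry("GPT-5", "OpenAI"),
    ModelEntry("Claude Sonnet 4", "Anthropic"),
    ModelEntry("Gemini 2.5 Flash", "Google"),
]
toggle(models, "Claude Sonnet 4")  # disable one model
print(dropdown_models(models))     # ['GPT-5', 'Gemini 2.5 Flash']
print(len(models))                 # 3: the disabled model is still listed
```

Keeping disabled models in the list, rather than deleting them, is what lets a model be re-enabled later without reconfiguring it.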

How to Enable/Disable Models

- Open the model management section in the sidebar

- Find the model you want to configure

- Toggle the enable/disable switch

- Changes apply immediately; disabled models no longer appear in the prompt editor
